Hi, I’m Hengyuan!

Hi! I’m Hengyuan Xu, and this site documents my work and background. Contact me by email:

doby-xu@outlook.com

News: WithAnyone is now released! It generates high-quality, controllable, ID-consistent images. The demo is out; try it now!

About Me

I am a Master’s student in FVL at the School of Computer Science, Fudan University, advised by Xingjun Ma. I received my bachelor’s degree from SEIEE at Shanghai Jiao Tong University, where I was a research intern at the John Hopcroft Center for Computer Science, advised by Liyao Xiang. I am sincerely grateful to my mentors and collaborators. I am currently interning at StepFun, exploring ID-consistent image generation.

I am still exploring the research directions that interest me most. My previous research explored the mathematical properties of Transformers and their potential applications in privacy-preserving deep learning and model intellectual property protection. Recently, I have been focusing on generative AI and its applications.

Publications

- Hengyuan Xu, Wei Cheng, Peng Xing, Yixiao Fang, Shuhan Wu, Rui Wang, Xianfang Zeng, Daxin Jiang, Gang Yu, Xingjun Ma, Yu-Gang Jiang. “WithAnyone: Towards Controllable and ID Consistent Image Generation” arXiv preprint arXiv:2510.14975

- Hengyuan Xu, Liyao Xiang, Xingjun Ma, Borui Yang, Baochun Li. “Hufu: A Modality-Agnostic Watermarking System for Pre-Trained Transformers via Permutation Equivariance” arXiv preprint arXiv:2403.05842

- Xingjun Ma et al. “Safety at Scale: A Comprehensive Survey of Large Model Safety” arXiv preprint arXiv:2502.05206

- Cailin Zhuang et al. “ViStoryBench: Comprehensive Benchmark Suite for Story Visualization” arXiv preprint arXiv:2505.24862

-

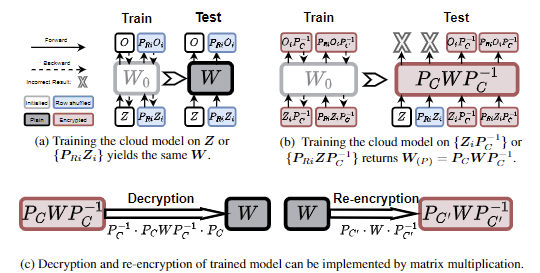

- Hengyuan Xu, Liyao Xiang, Hangyu Ye, Dixi Yao, Pengzhi Chu, Baochun Li. “Permutation Equivariance of Transformers and Its Applications” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024)

- Dixi Yao, Liyao Xiang, Hengyuan Xu, Hangyu Ye, Yingqi Chen. “Privacy-Preserving Split Learning via Patch Shuffling over Transformers” in Proceedings of the 22nd IEEE International Conference on Data Mining (ICDM)

Internship Experience

- Research Intern, StepFun, Shanghai, China (2024.11 - Present)

  - Supported algorithm development for the “Lipu” app.

  - Developed and open-sourced WithAnyone:

    - Curated million-scale paired ID-centric datasets.

    - Observed and formulated the copy-paste pattern in current ID-consistency generation methods using a newly developed benchmark.

    - Trained a 6B IPA model on FLUX from scratch, escaping the trade-off between similarity and diversity.

  - Exploring MLLM-based reflection for TI2I models, such as editing and cref models.

- Research Intern, John Hopcroft Center for Computer Science, Shanghai Jiao Tong University, Shanghai, China (2022.11 - 2024.06)

  - Worked on privacy-preserving deep learning and model intellectual property protection.

  - Explored the mathematical properties of Transformers.